LLMs Understand Glass-Box Models, Discover Surprises, and Suggest Repairs

Aug 07, 2023Benjamin J. Lengerich, Sebastian Bordt, Harsha Nori, Mark E. Nunnally, Yin Aphinyanaphongs, Manolis Kellis, Rich Caruana

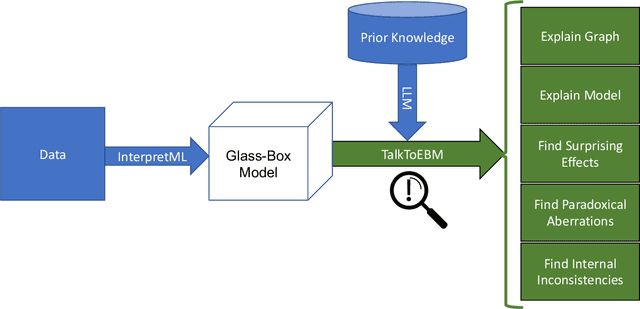

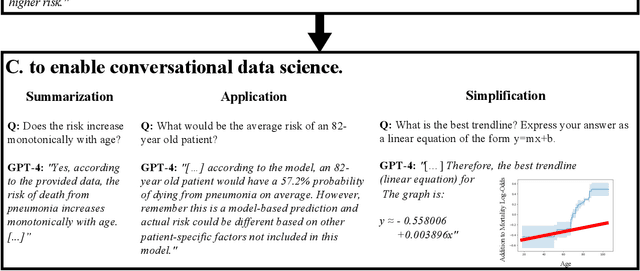

We show that large language models (LLMs) are remarkably good at working with interpretable models that decompose complex outcomes into univariate graph-represented components. By adopting a hierarchical approach to reasoning, LLMs can provide comprehensive model-level summaries without ever requiring the entire model to fit in context. This approach enables LLMs to apply their extensive background knowledge to automate common tasks in data science such as detecting anomalies that contradict prior knowledge, describing potential reasons for the anomalies, and suggesting repairs that would remove the anomalies. We use multiple examples in healthcare to demonstrate the utility of these new capabilities of LLMs, with particular emphasis on Generalized Additive Models (GAMs). Finally, we present the package $\texttt{TalkToEBM}$ as an open-source LLM-GAM interface.

Estimating Discontinuous Time-Varying Risk Factors and Treatment Benefits for COVID-19 with Interpretable ML

Nov 15, 2022Benjamin Lengerich, Mark E. Nunnally, Yin Aphinyanaphongs, Rich Caruana

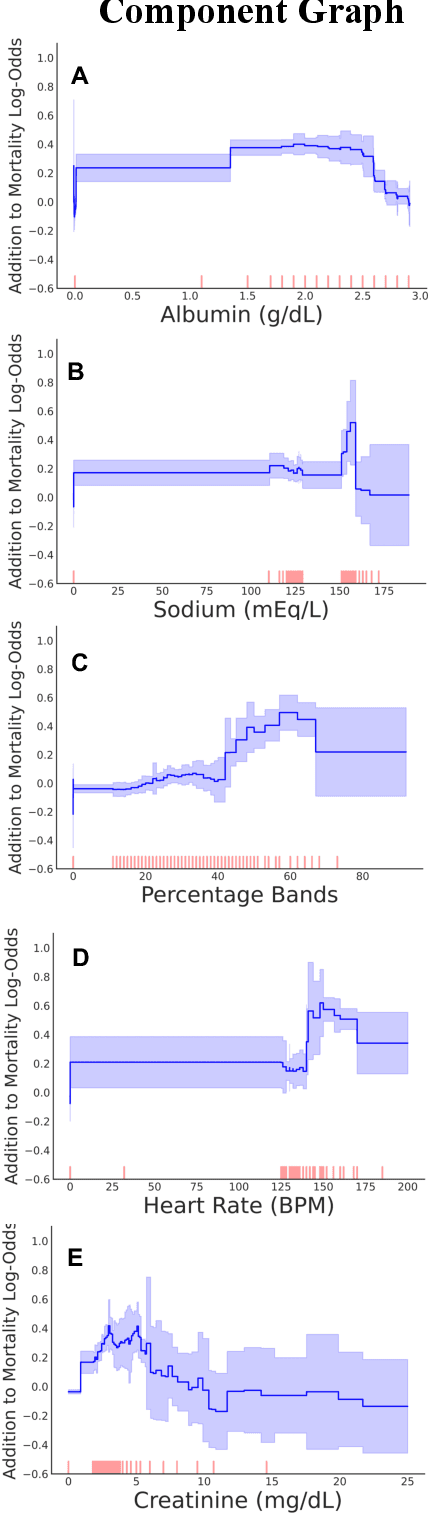

Treatment protocols, disease understanding, and viral characteristics changed over the course of the COVID-19 pandemic; as a result, the risks associated with patient comorbidities and biomarkers also changed. We add to the conversation regarding inflammation, hemostasis and vascular function in COVID-19 by performing a time-varying observational analysis of over 4000 patients hospitalized for COVID-19 in a New York City hospital system from March 2020 to August 2021. To perform this analysis, we apply tree-based generalized additive models with temporal interactions which recover discontinuous risk changes caused by discrete protocols changes. We find that the biomarkers of thrombosis increasingly predicted mortality from March 2020 to August 2021, while the association between biomarkers of inflammation and thrombosis weakened. Beyond COVID-19, this presents a straightforward methodology to estimate unknown and discontinuous time-varying effects.

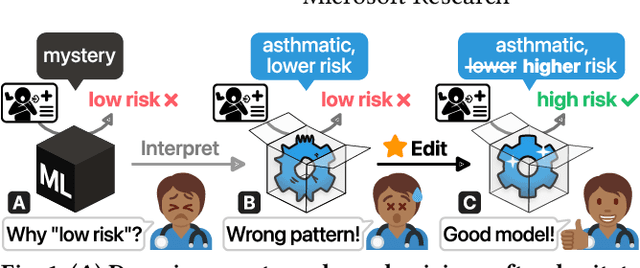

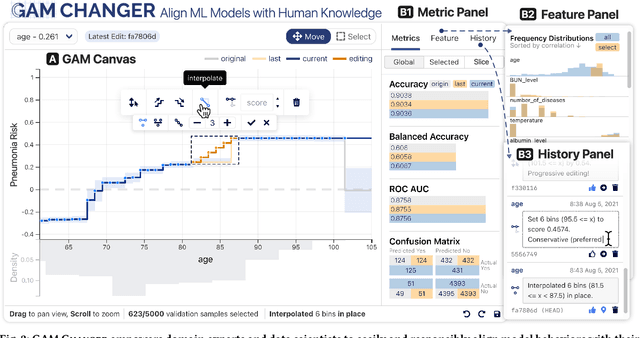

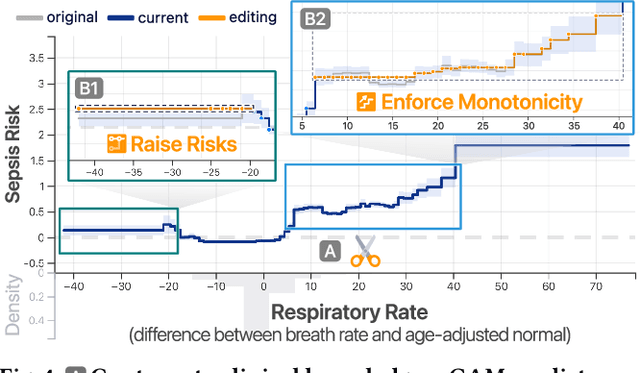

Interpretability, Then What? Editing Machine Learning Models to Reflect Human Knowledge and Values

Jun 30, 2022Zijie J. Wang, Alex Kale, Harsha Nori, Peter Stella, Mark E. Nunnally, Duen Horng Chau, Mihaela Vorvoreanu, Jennifer Wortman Vaughan, Rich Caruana

Machine learning (ML) interpretability techniques can reveal undesirable patterns in data that models exploit to make predictions--potentially causing harms once deployed. However, how to take action to address these patterns is not always clear. In a collaboration between ML and human-computer interaction researchers, physicians, and data scientists, we develop GAM Changer, the first interactive system to help domain experts and data scientists easily and responsibly edit Generalized Additive Models (GAMs) and fix problematic patterns. With novel interaction techniques, our tool puts interpretability into action--empowering users to analyze, validate, and align model behaviors with their knowledge and values. Physicians have started to use our tool to investigate and fix pneumonia and sepsis risk prediction models, and an evaluation with 7 data scientists working in diverse domains highlights that our tool is easy to use, meets their model editing needs, and fits into their current workflows. Built with modern web technologies, our tool runs locally in users' web browsers or computational notebooks, lowering the barrier to use. GAM Changer is available at the following public demo link: https://interpret.ml/gam-changer.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge