Training-Free Unsupervised Prompt for Vision-Language Models

Apr 25, 2024 · Sifan Long, Linbin Wang, Zhen Zhao, Zichang Tan, Yiming Wu, Shengsheng Wang, Jingdong Wang

Prompt learning has become the most effective paradigm for adapting large pre-trained vision-language models (VLMs) to downstream tasks. Recently, unsupervised prompt tuning methods, such as UPL and POUF, directly leverage pseudo-labels as supervisory information to fine-tune additional adaptation modules on unlabeled data. However, inaccurate pseudo-labels easily misguide the tuning process and result in poor representation capabilities. In light of this, we propose Training-Free Unsupervised Prompts (TFUP), which maximally preserves the inherent representation capabilities and enhances them with a residual connection to similarity-based prediction probabilities in a training-free and labeling-free manner. Specifically, we integrate both instance confidence and prototype scores to select representative samples, which are used to customize a reliable Feature Cache Model (FCM) for training-free inference. Then, we design a Multi-level Similarity Measure (MSM) that considers both feature-level and semantic-level similarities to calculate the distance between each test image and each cached sample; this distance serves as the weight of the corresponding cached label when generating similarity-based prediction probabilities. In this way, TFUP achieves surprising performance, even surpassing training-based methods on multiple classification datasets. Based on our TFUP, we propose a training-based approach (TFUP-T) to further boost the adaptation performance. In addition to the standard cross-entropy loss, TFUP-T adopts an additional marginal distribution entropy loss to constrain the model from a global perspective. Our TFUP-T achieves new state-of-the-art classification performance compared to unsupervised and few-shot adaptation approaches on multiple benchmarks. In particular, TFUP-T improves the classification accuracy of POUF by 3.3% on the most challenging Domain-Net dataset.
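The residual combination of zero-shot predictions with cache-based, similarity-weighted labels can be illustrated with a minimal sketch. This is not the authors' implementation: it uses only a feature-level cosine similarity (the semantic-level part of MSM and the sample-selection step are omitted), and the exponential kernel, the function name `cache_residual_predict`, and the parameters `alpha` and `beta` are assumptions made for illustration.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cosine(a, b):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def cache_residual_predict(test_feat, zero_shot_logits, cache_feats,
                           cache_labels, num_classes, alpha=1.0, beta=5.0):
    """Add similarity-weighted cached labels to zero-shot logits.

    alpha scales the residual term and beta sharpens the similarity
    kernel; both are hypothetical hyperparameters for this sketch.
    """
    cache_logits = [0.0] * num_classes
    for feat, label in zip(cache_feats, cache_labels):
        # Assumed kernel: weight is 1 for identical directions, decays otherwise.
        weight = math.exp(-beta * (1.0 - cosine(test_feat, feat)))
        cache_logits[label] += weight
    combined = [z + alpha * c for z, c in zip(zero_shot_logits, cache_logits)]
    return softmax(combined)
```

Setting `alpha=0` recovers the plain zero-shot prediction, which is what makes the cache term a residual: it can only adjust, not replace, the pre-trained model's output.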

Unsupervised Sentence Representation Learning with Frequency-induced Adversarial Tuning and Incomplete Sentence Filtering

May 15, 2023 · Bing Wang, Ximing Li, Zhiyao Yang, Yuanyuan Guan, Jiayin Li, Shengsheng Wang

Pre-trained language models (PLMs) are nowadays the mainstay of Unsupervised Sentence Representation Learning (USRL). However, PLMs are sensitive to the frequency information of words from their pre-training corpora, resulting in an anisotropic embedding space, where the embeddings of high-frequency words are clustered but those of low-frequency words are sparsely dispersed. This anisotropy causes two problems, similarity bias and information bias, which lower the quality of sentence embeddings. To solve these problems, we fine-tune PLMs by leveraging the frequency information of words and propose a novel USRL framework, namely Sentence Representation Learning with Frequency-induced Adversarial tuning and Incomplete sentence filtering (SLT-FAI). We calculate the word frequencies over the pre-training corpora of PLMs and assign each word a frequency label by thresholding. With these labels, (1) we incorporate a similarity discriminator that distinguishes the embeddings of high-frequency and low-frequency words, and adversarially tune the PLM with it, which yields a uniformly frequency-invariant embedding space; and (2) we propose a novel incomplete sentence detection task, where we incorporate an information discriminator to distinguish the embeddings of original sentences from those of incomplete sentences created by randomly masking several low-frequency words, which emphasizes the more informative low-frequency words. Our SLT-FAI is a flexible, plug-and-play framework that can be integrated with existing USRL techniques. We evaluate SLT-FAI with various backbones on benchmark datasets. Empirical results indicate that SLT-FAI can be superior to the existing USRL baselines. Our code is released at https://github.com/wangbing1416/SLT-FAI.

Task-Oriented Multi-Modal Mutual Leaning for Vision-Language Models

Mar 30, 2023 · Sifan Long, Zhen Zhao, Junkun Yuan, Zichang Tan, Jiangjiang Liu, Luping Zhou, Shengsheng Wang, Jingdong Wang

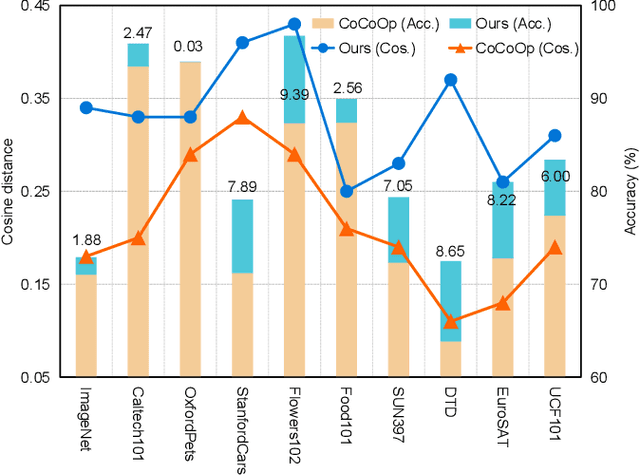

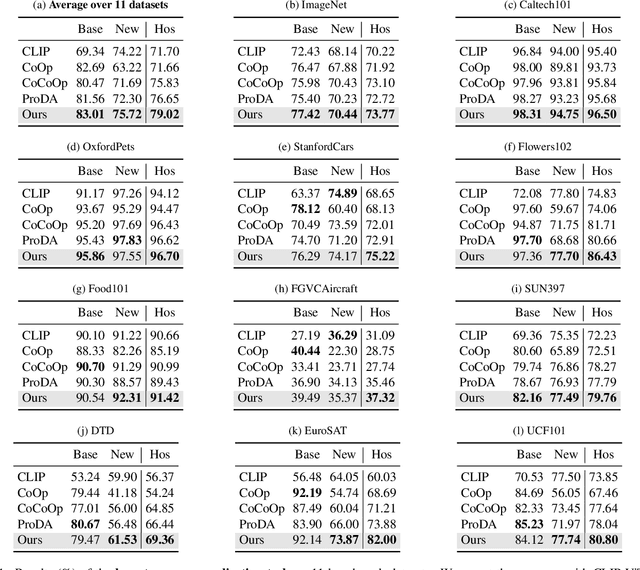

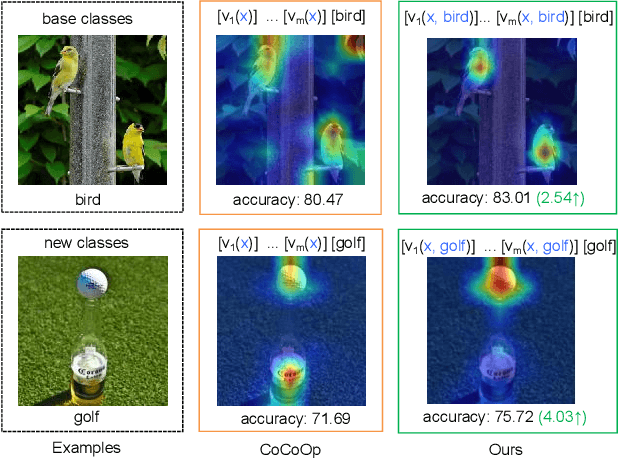

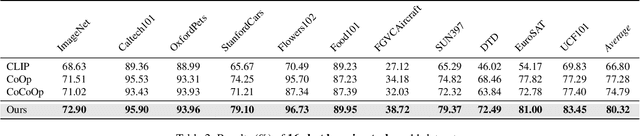

Prompt learning has become one of the most efficient paradigms for adapting large pre-trained vision-language models to downstream tasks. Current state-of-the-art methods, like CoOp and ProDA, tend to adopt soft prompts to learn an appropriate prompt for each specific task. The recent CoCoOp further boosts the base-to-new generalization performance via an image-conditional prompt. However, it directly fuses identical image semantics into the prompts of different labels and significantly weakens the discrimination among different classes, as shown in our experiments. Motivated by this observation, we first propose a class-aware text prompt (CTP) to enrich generated prompts with label-related image information. Unlike CoCoOp, CTP can effectively involve image semantics and avoid introducing extra ambiguities into different prompts. On the other hand, instead of reserving the complete image representations, we propose text-guided feature tuning (TFT) to make the image branch attend to class-related representations. A contrastive loss is employed to align such augmented text and image representations on downstream tasks. In this way, the image-to-text CTP and text-to-image TFT can be mutually promoted to enhance the adaptation of VLMs for downstream tasks. Extensive experiments demonstrate that our method outperforms the existing methods by a significant margin. In particular, compared to CoCoOp, we achieve an average improvement of 4.03% on new classes and 3.19% on harmonic-mean over eleven classification benchmarks.

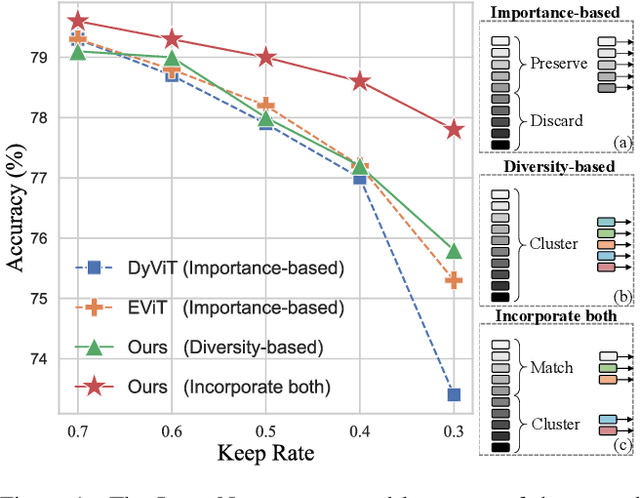

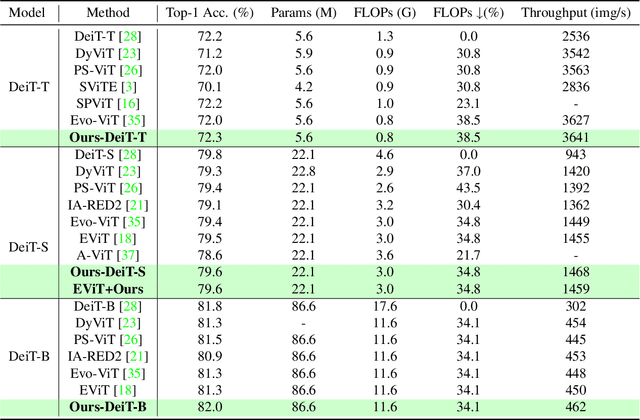

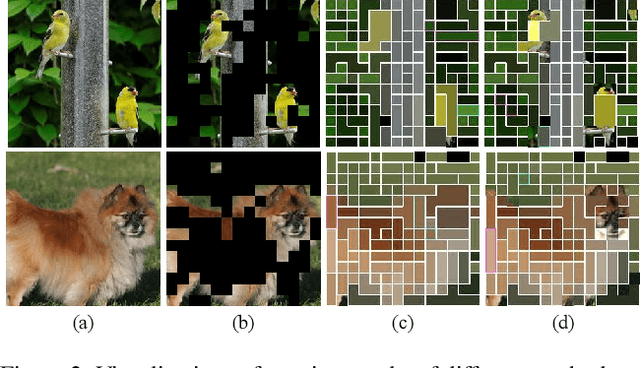

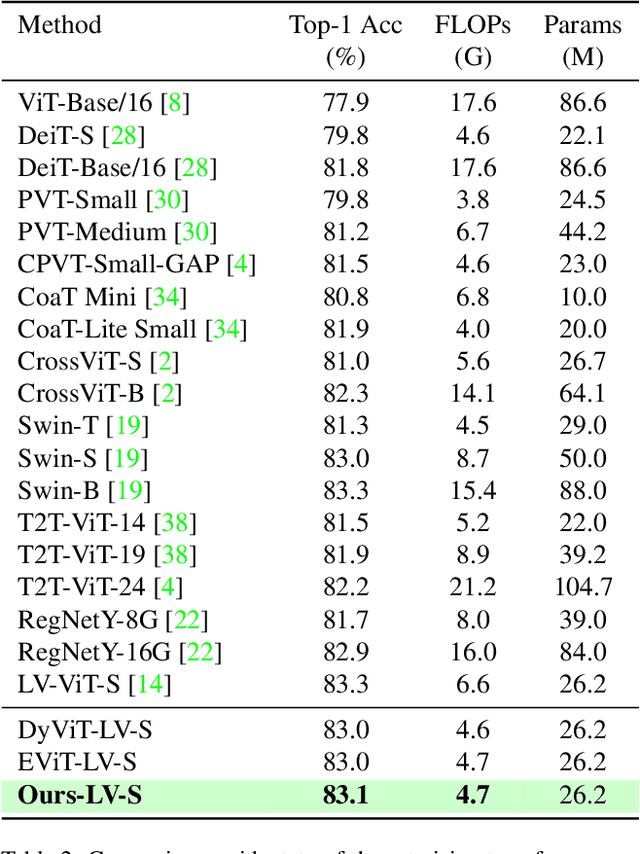

Beyond Attentive Tokens: Incorporating Token Importance and Diversity for Efficient Vision Transformers

Nov 21, 2022 · Sifan Long, Zhen Zhao, Jimin Pi, Shengsheng Wang, Jingdong Wang

Vision transformers have achieved significant improvements on various vision tasks, but their quadratic interactions between tokens significantly reduce computational efficiency. Many pruning methods have recently been proposed to remove redundant tokens for efficient vision transformers. However, existing studies mainly focus on token importance to preserve local attentive tokens and completely ignore global token diversity. In this paper, we emphasize that diverse global semantics are crucial and propose an efficient token decoupling and merging method that jointly considers token importance and diversity for token pruning. According to the class token attention, we decouple the attentive and inattentive tokens. In addition to preserving the most discriminative local tokens, we merge similar inattentive tokens and match homogeneous attentive tokens to maximize the token diversity. Despite its simplicity, our method obtains a promising trade-off between model complexity and classification accuracy. On DeiT-S, our method reduces the FLOPs by 35% with only a 0.2% accuracy drop. Notably, benefiting from maintaining the token diversity, our method can even improve the accuracy of DeiT-T by 0.1% after reducing its FLOPs by 40%.
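The decoupling step described above can be sketched in a few lines. This is a simplified illustration rather than the paper's method: it splits tokens by class-token attention and fuses all inattentive tokens into a single attention-weighted token, whereas the abstract describes merging similar inattentive tokens and matching homogeneous attentive tokens. The function name `decouple_and_merge` and the `keep` parameter are hypothetical.

```python
def decouple_and_merge(tokens, cls_attention, keep):
    """Keep the `keep` most attended tokens and fuse the rest into one.

    tokens: list of equal-length feature vectors (lists of floats).
    cls_attention: positive attention score of the class token for each token.
    Returns the kept tokens in their original order, followed by one
    merged token if any inattentive tokens exist.
    """
    # Rank token indices by class-token attention, highest first.
    order = sorted(range(len(tokens)), key=lambda i: cls_attention[i], reverse=True)
    attentive = sorted(order[:keep])      # restore original ordering
    inattentive = sorted(order[keep:])

    kept = [tokens[i] for i in attentive]
    if not inattentive:
        return kept

    # Simplification: a single attention-weighted average of all
    # inattentive tokens, so their global information is not discarded.
    total = sum(cls_attention[i] for i in inattentive)
    dim = len(tokens[0])
    merged = [
        sum(cls_attention[i] * tokens[i][d] for i in inattentive) / total
        for d in range(dim)
    ]
    return kept + [merged]
```

The point of the merged token is that pruning reduces the sequence length (and hence the quadratic attention cost) while still carrying a summary of the removed tokens forward.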

Next-item Recommendations in Short Sessions

Jul 20, 2021 · Wenzhuo Song, Shoujin Wang, Yan Wang, Shengsheng Wang

The changing preferences of users towards items trigger the emergence of session-based recommender systems (SBRSs), which aim to model the dynamic preferences of users for next-item recommendations. However, most of the existing studies on SBRSs rely only on long sessions for recommendations and ignore short sessions, even though short sessions account for a large proportion of most real-world datasets. As a result, the applicability of existing SBRS solutions is greatly reduced. In a short session, quite limited contextual information is available, making the next-item recommendation very challenging. To this end, in this paper, inspired by the success of few-shot learning (FSL) in effectively learning a model with limited instances, we formulate the next-item recommendation as an FSL problem. Accordingly, following the basic idea of a representative approach for FSL, i.e., meta-learning, we devise an effective SBRS called INter-SEssion collaborative Recommender neTwork (INSERT) for next-item recommendations in short sessions. With the carefully devised local module and global module, INSERT is able to learn an optimal preference representation of the current user in a given short session. In particular, in the global module, a similar session retrieval network (SSRN) is designed to find sessions similar to the current short session among the historical sessions of both the current user and other users. The obtained similar sessions are then utilized to complement and optimize the preference representation learned from the current short session by the local module for more accurate next-item recommendations in this short session. Extensive experiments conducted on two real-world datasets demonstrate the superiority of our proposed INSERT over the state-of-the-art SBRSs when making next-item recommendations in short sessions.

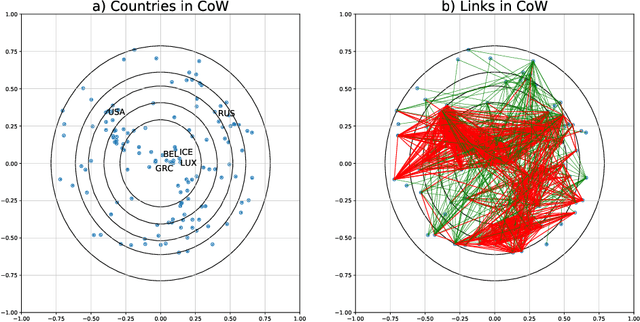

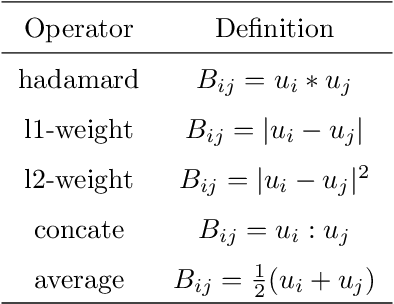

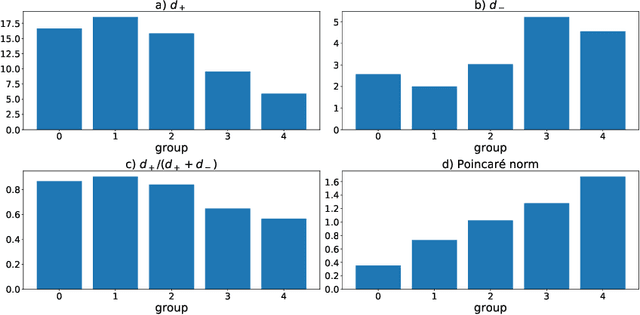

Hyperbolic Node Embedding for Signed Networks

Oct 29, 2019 · Wenzhuo Song, Shengsheng Wang

The rapidly evolving World Wide Web has produced a large amount of complex and heterogeneous network data. To facilitate network analysis algorithms, signed network embedding methods automatically learn feature vectors of nodes in signed networks. However, existing algorithms only embed the networks into Euclidean spaces, although many reported features of signed networks are better suited to non-Euclidean space. Besides, previous works do not consider the hierarchical organization of networks, which is widespread in real-world networks. In this work, we investigate whether hyperbolic space is a better choice to represent signed networks. We develop a non-Euclidean signed network embedding method based on structural balance theory and Riemannian optimization. Our method embeds signed networks into a Poincaré ball, a hyperbolic space that can be seen as a continuous tree. This feature enables our approach to capture the underlying hierarchical structure in signed networks. We empirically compare our method with three Euclidean-based baselines in visualization, sign prediction, and reconstruction tasks on six real-world datasets. The results show that our hyperbolic embedding performs better than its Euclidean counterparts and can extract a meaningful latent hierarchical structure from signed networks.
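For readers unfamiliar with the Poincaré ball, the sketch below computes the standard geodesic distance between two points inside the unit ball, d(u, v) = arcosh(1 + 2‖u − v‖² / ((1 − ‖u‖²)(1 − ‖v‖²))). It shows only the geometry, not the paper's embedding objective or its Riemannian optimizer; the function name `poincare_distance` is chosen here for illustration.

```python
import math


def poincare_distance(u, v):
    """Geodesic distance between two points in the open unit Poincaré ball."""
    sq_u = sum(x * x for x in u)
    sq_v = sum(x * x for x in v)
    if sq_u >= 1.0 or sq_v >= 1.0:
        raise ValueError("points must lie strictly inside the unit ball")
    sq_diff = sum((a - b) ** 2 for a, b in zip(u, v))
    # The denominator shrinks towards the boundary, so distances there blow up.
    return math.acosh(1.0 + 2.0 * sq_diff / ((1.0 - sq_u) * (1.0 - sq_v)))
```

Distances grow rapidly near the boundary of the ball, which is why the space behaves like a continuous tree: nodes high in a hierarchy can sit near the origin while their many descendants spread out towards the edge.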

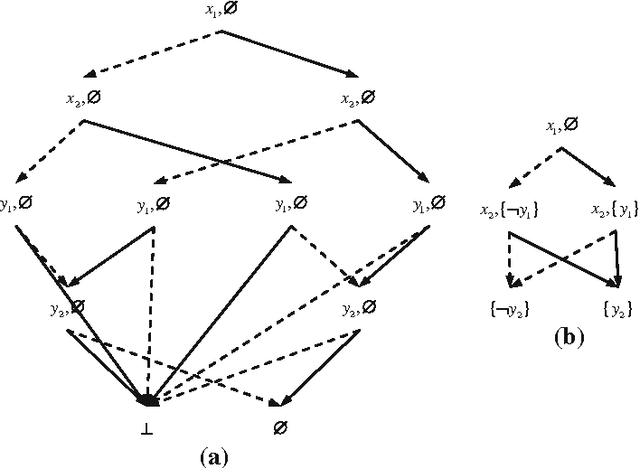

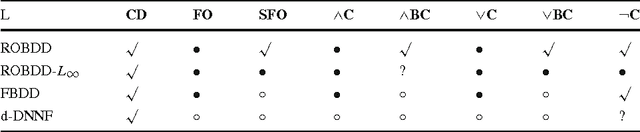

Reduced Ordered Binary Decision Diagram with Implied Literals: A New knowledge Compilation Approach

Mar 24, 2011 · Yong Lai, Dayou Liu, Shengsheng Wang

Knowledge compilation is an approach to tackle the computational intractability of general reasoning problems. According to this approach, knowledge bases are converted off-line into a target compilation language which is tractable for on-line querying. Reduced ordered binary decision diagram (ROBDD) is one of the most influential target languages. We generalize ROBDD by associating some implied literals with each node; the new language is called reduced ordered binary decision diagram with implied literals (ROBDD-L). We then discuss a family of subsets of ROBDD-L, called ROBDD-i, with precisely i implied literals (0 ≤ i ≤ ∞). In particular, ROBDD-0 is isomorphic to ROBDD, while ROBDD-∞ requires each node to be associated with as many implied literals as possible. We show that ROBDD-i is unique with respect to a specific variable order, and that ROBDD-∞ is the most succinct subset of ROBDD-L and can meet most of the querying requirements involved in the knowledge compilation map. Finally, we propose an ROBDD-i compilation algorithm for any i and an ROBDD-∞ compilation algorithm. Based on them, we implement an ROBDD-L package called BDDjLu and draw some conclusions from preliminary experimental results: ROBDD-∞ is obviously smaller than ROBDD for all benchmarks; ROBDD-∞ is smaller than d-DNNF on the benchmarks whose compilation results are relatively small; and it seems better to transform ROBDD-∞ into FBDDs and ROBDDs than to compile the benchmarks directly.
