Wilsonian Renormalization of Neural Network Gaussian Processes

May 09, 2024 · Jessica N. Howard, Ro Jefferson, Anindita Maiti, Zohar Ringel

Separating relevant and irrelevant information is key to any modeling process or scientific inquiry. Theoretical physics offers a powerful tool for achieving this in the form of the renormalization group (RG). Here we demonstrate a practical approach to performing Wilsonian RG in the context of Gaussian Process (GP) Regression. We systematically integrate out the unlearnable modes of the GP kernel, thereby obtaining an RG flow of the Gaussian Process in which the data plays the role of the energy scale. In simple cases, this results in a universal flow of the ridge parameter, which becomes input-dependent in the richer scenario in which non-Gaussianities are included. In addition to being analytically tractable, this approach goes beyond structural analogies between RG and neural networks by providing a natural connection between RG flow and learnable vs. unlearnable modes. Studying such flows may improve our understanding of feature learning in deep neural networks, and identify potential universality classes in these models.

Towards Understanding Inductive Bias in Transformers: A View From Infinity

Feb 07, 2024 · Itay Lavie, Guy Gur-Ari, Zohar Ringel

We study inductive bias in Transformers in the infinitely over-parameterized Gaussian process limit and argue that Transformers tend to be biased towards more permutation-symmetric functions in sequence space. We show that the representation theory of the symmetric group can be used to give quantitative analytical predictions when the dataset is symmetric under permutations between tokens. We present a simplified Transformer block and solve the model in this limit, including accurate predictions for the learning curves and network outputs. We show that in common setups, one can derive tight bounds in the form of a scaling law for the learnability as a function of the context length. Finally, we argue that the WikiText dataset does indeed possess a degree of permutation symmetry.

Droplets of Good Representations: Grokking as a First Order Phase Transition in Two Layer Networks

Oct 05, 2023 · Noa Rubin, Inbar Seroussi, Zohar Ringel

A key property of deep neural networks (DNNs) is their ability to learn new features during training. This intriguing aspect of deep learning stands out most clearly in recently reported Grokking phenomena. While mainly reflected as a sudden increase in test accuracy, Grokking is also believed to be a beyond lazy-learning/Gaussian Process (GP) phenomenon involving feature learning. Here we apply a recent development in the theory of feature learning, the adaptive kernel approach, to two teacher-student models with cubic-polynomial and modular addition teachers. We provide analytical predictions on feature learning and Grokking properties of these models and demonstrate a mapping between Grokking and the theory of phase transitions. We show that after Grokking, the state of the DNN is analogous to the mixed phase following a first-order phase transition. In this mixed phase, the DNN generates useful internal representations of the teacher that are sharply distinct from those before the transition.

Speed Limits for Deep Learning

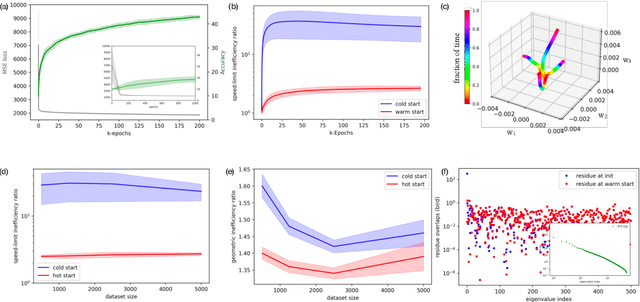

Jul 27, 2023 · Inbar Seroussi, Alexander A. Alemi, Moritz Helias, Zohar Ringel

State-of-the-art neural networks require extreme computational power to train. It is therefore natural to wonder whether they are optimally trained. Here we apply a recent advancement in stochastic thermodynamics which allows bounding the speed at which one can go from the initial weight distribution to the final distribution of the fully trained network, based on the ratio of their Wasserstein-2 distance and the entropy production rate of the dynamical process connecting them. Considering both gradient-flow and Langevin training dynamics, we provide analytical expressions for these speed limits for linear and linearizable neural networks (e.g., the Neural Tangent Kernel (NTK)). Remarkably, given some plausible scaling assumptions on the NTK spectra and the spectral decomposition of the labels, learning is optimal in a scaling sense. Our results are consistent with small-scale experiments with Convolutional Neural Networks (CNNs) and Fully Connected Neural Networks (FCNs) on CIFAR-10, showing a short, highly non-optimal regime followed by a longer optimal regime.
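As a rough illustration of the distance that enters such a bound, the sketch below computes the Wasserstein-2 distance between two one-dimensional Gaussians using the standard closed form W2^2 = (m_a - m_b)^2 + (s_a - s_b)^2. The function name and the example numbers are hypothetical and not taken from the paper, and the sketch does not reproduce the speed-limit bound itself.

```python
import math


def w2_gaussian_1d(mean_a, std_a, mean_b, std_b):
    """Wasserstein-2 distance between N(mean_a, std_a^2) and N(mean_b, std_b^2).

    Uses the closed form valid for one-dimensional Gaussians:
    W2^2 = (mean_a - mean_b)^2 + (std_a - std_b)^2.
    """
    if std_a < 0 or std_b < 0:
        raise ValueError("standard deviations must be non-negative")
    return math.sqrt((mean_a - mean_b) ** 2 + (std_a - std_b) ** 2)


# Hypothetical example: distribution of a single weight before and after training.
initial_mean, initial_std = 0.0, 1.0
final_mean, final_std = 3.0, 5.0

distance = w2_gaussian_1d(initial_mean, initial_std, final_mean, final_std)
print(f"W2 distance between initial and final weight distributions: {distance}")
```

For full weight vectors the distributions are high-dimensional and generally non-Gaussian, so this one-dimensional case only conveys what the quantity measures: how far, in a transport sense, training has to move the weight distribution.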

Spectral-Bias and Kernel-Task Alignment in Physically Informed Neural Networks

Jul 12, 2023 · Inbar Seroussi, Asaf Miron, Zohar Ringel

Physically informed neural networks (PINNs) are a promising emerging method for solving differential equations. As in many other deep learning approaches, the choice of PINN design and training protocol requires careful craftsmanship. Here, we suggest a comprehensive theoretical framework that sheds light on this important problem. Leveraging an equivalence between infinitely over-parameterized neural networks and Gaussian process regression (GPR), we derive an integro-differential equation that governs PINN prediction in the large data-set limit -- the Neurally-Informed Equation (NIE). This equation augments the original one by a kernel term reflecting architecture choices and allows quantifying implicit bias induced by the network via a spectral decomposition of the source term in the original differential equation.

Separation of scales and a thermodynamic description of feature learning in some CNNs

Dec 31, 2021 · Inbar Seroussi, Zohar Ringel

Deep neural networks (DNNs) are powerful tools for compressing and distilling information. Due to their scale and complexity, often involving billions of inter-dependent internal degrees of freedom, exact analysis approaches often fall short. A common strategy in such cases is to identify slow degrees of freedom that average out the erratic behavior of the underlying fast microscopic variables. Here, we identify such a separation of scales occurring in over-parameterized deep convolutional neural networks (CNNs) at the end of training. It implies that neuron pre-activations fluctuate in a nearly Gaussian manner with a deterministic latent kernel. While for CNNs with infinitely many channels these kernels are inert, for finite CNNs they adapt and learn from data in an analytically tractable manner. The resulting thermodynamic theory of deep learning yields accurate predictions on several deep non-linear CNN toy models. In addition, it provides new ways of analyzing and understanding CNNs.

A self consistent theory of Gaussian Processes captures feature learning effects in finite CNNs

Jun 08, 2021 · Gadi Naveh, Zohar Ringel

Deep neural networks (DNNs) in the infinite width/channel limit have received much attention recently, as they provide a clear analytical window to deep learning via mappings to Gaussian Processes (GPs). Despite its theoretical appeal, this viewpoint lacks a crucial ingredient of deep learning in finite DNNs, one lying at the heart of their success: feature learning. Here we consider DNNs trained with noisy gradient descent on a large training set and derive a self-consistent Gaussian Process theory accounting for strong finite-DNN and feature learning effects. Applying this to a toy model of a two-layer linear convolutional neural network (CNN) shows good agreement with experiments. We further identify, both analytically and numerically, a sharp transition between a feature learning regime and a lazy learning regime in this model. Strong finite-DNN effects are also derived for a non-linear two-layer fully connected network. Our self-consistent theory provides a rich and versatile analytical framework for studying feature learning and other non-lazy effects in finite DNNs.

Predicting the outputs of finite networks trained with noisy gradients

Apr 02, 2020 · Gadi Naveh, Oded Ben-David, Haim Sompolinsky, Zohar Ringel

A recent line of studies has focused on the infinite width limit of deep neural networks (DNNs) where, under a certain deterministic training protocol, the DNN outputs are related to a Gaussian Process (GP) known as the Neural Tangent Kernel (NTK). However, finite-width DNNs differ from GPs quantitatively, and for CNNs the difference may be qualitative. Here we present a DNN training protocol involving noise whose outcome is mappable to a certain non-Gaussian stochastic process. An analytical framework is then introduced to analyze the resulting non-Gaussian process, whose deviation from a GP is controlled by the finite width. Our work extends previous relations between DNNs and GPs in several ways: (a) In the infinite width limit, it establishes a mapping between DNNs and a GP different from the NTK. (b) It allows computing analytically the general form of the finite width correction (FWC) for DNNs with arbitrary activation functions and depth, and further provides insight into the magnitude and implications of these FWCs. (c) It appears capable of providing better performance than the corresponding GP in the case of CNNs. We are able to predict the outputs of empirical finite networks with high accuracy, improving upon the accuracy of GP predictions by over an order of magnitude. Overall, we provide a framework that offers both an analytical handle and a more faithful model of real-world settings than previous studies in this avenue of research.

Learning Curves for Deep Neural Networks: A Gaussian Field Theory Perspective

Jun 12, 2019 · Omry Cohen, Or Malka, Zohar Ringel

A series of recent works suggest that deep neural networks (DNNs), of fixed depth, are equivalent to certain Gaussian Processes (NNGP/NTK) in the highly over-parameterized regime (width or number-of-channels going to infinity). Other works suggest that this limit is relevant for real-world DNNs. These results invite further study into the generalization properties of Gaussian Processes of the NNGP and NTK type. Here we make several contributions along this line. First, we develop a formalism, based on field theory tools, for calculating learning curves perturbatively in one over the dataset size. For the case of NNGPs, this formalism naturally extends to finite width corrections. Second, in cases where one can diagonalize the covariance-function of the NNGP/NTK, we provide analytic expressions for the asymptotic learning curves of any given target function. These go beyond the standard equivalence kernel results. Last, we provide closed analytic expressions for the eigenvalues of NNGP/NTK kernels of depth 2 fully-connected ReLU networks. For datasets on the hypersphere, the eigenfunctions of such kernels, at any depth, are hyperspherical harmonics. A simple coherent picture emerges wherein fully-connected DNNs have a strong entropic bias towards functions which are low order polynomials of the input.
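For orientation, the kind of covariance function such an analysis diagonalizes can be sketched for a single ReLU hidden layer. The block below evaluates the standard arc-cosine form of E[relu(w·x) relu(w·y)], assuming weights w drawn from a standard normal with no bias term; the function name and these normalization choices are assumptions made for illustration, and the sketch does not reproduce the paper's eigenvalue expressions.

```python
import math


def relu_nngp_kernel(x, y):
    """E[relu(w.x) * relu(w.y)] for w ~ N(0, I), with no bias (assumed setup).

    Standard arc-cosine form:
    |x||y| / (2*pi) * (sin(theta) + (pi - theta) * cos(theta)),
    where theta is the angle between x and y.
    """
    if len(x) != len(y):
        raise ValueError("inputs must have the same dimension")
    norm_x = math.sqrt(sum(v * v for v in x))
    norm_y = math.sqrt(sum(v * v for v in y))
    if norm_x == 0.0 or norm_y == 0.0:
        return 0.0
    dot = sum(a * b for a, b in zip(x, y))
    # Clamp to guard against rounding slightly outside [-1, 1].
    cos_theta = max(-1.0, min(1.0, dot / (norm_x * norm_y)))
    theta = math.acos(cos_theta)
    angular_part = math.sin(theta) + (math.pi - theta) * cos_theta
    return norm_x * norm_y * angular_part / (2.0 * math.pi)


# The kernel depends on the inputs only through their norms and relative angle.
print(relu_nngp_kernel([1.0, 0.0], [1.0, 0.0]))   # identical inputs
print(relu_nngp_kernel([1.0, 0.0], [0.0, 1.0]))   # orthogonal inputs
print(relu_nngp_kernel([1.0, 0.0], [-1.0, 0.0]))  # antiparallel inputs
```

Because the kernel on fixed-norm inputs is a function of the angle alone, it is rotation invariant, which is consistent with the abstract's statement that on the hypersphere the eigenfunctions are hyperspherical harmonics.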

The role of a layer in deep neural networks: a Gaussian Process perspective

Feb 06, 2019 · Oded Ben-David, Zohar Ringel

A fundamental question in deep learning concerns the role played by individual layers in a deep neural network (DNN) and the transferable properties of the data representations which they learn. To the extent that layers have clear roles, one should be able to optimize them separately using layer-wise loss functions. Such loss functions would describe the set of good data representations at each depth of the network and provide a target for layer-wise greedy optimization (LEGO). Here we introduce the Deep Gaussian Layer-wise loss functions (DGLs) which, we believe, are the first supervised layer-wise loss functions that are both explicit and competitive in terms of accuracy. The DGLs have a solid theoretical foundation: they become exact for wide DNNs, and we find that they can monitor standard end-to-end training. Being highly structured and symmetric, the DGLs provide a promising analytic route to understanding the internal representations generated by DNNs.