Percentile Criterion Optimization in Offline Reinforcement Learning

Apr 07, 2024 · Elita A. Lobo, Cyrus Cousins, Yair Zick, Marek Petrik

In reinforcement learning, robust policies for high-stakes decision-making problems with limited data are usually computed by optimizing the percentile criterion. The percentile criterion is approximately solved by constructing an ambiguity set that contains the true model with high probability and optimizing the policy for the worst model in the set. Since the percentile criterion is non-convex, constructing ambiguity sets is often challenging. Existing work uses Bayesian credible regions as ambiguity sets, but they are often unnecessarily large and result in learning overly conservative policies. To overcome these shortcomings, we propose a novel Value-at-Risk based dynamic programming algorithm to optimize the percentile criterion without explicitly constructing any ambiguity sets. Our theoretical and empirical results show that our algorithm implicitly constructs much smaller ambiguity sets and learns less conservative robust policies.
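
To make the contrast between the percentile criterion and a worst-case objective concrete, here is a minimal sketch and not the paper's algorithm. It assumes we already have the return of one fixed policy under each of several sampled models; the function names and the input array are hypothetical. The percentile value is the return that all but a `delta` fraction of sampled models meet or exceed, while the worst-case value over a set covering every sample is simply the minimum.

```python
import numpy as np

def percentile_return(returns, delta):
    """Empirical delta-quantile (Value-at-Risk) of sampled policy returns.

    returns: one return per sampled model (hypothetical input).
    delta:   fraction of sampled models allowed to fall at or below the value.
    """
    sorted_returns = np.sort(np.asarray(returns, dtype=float))
    # Position of the sample that closes the lowest delta fraction of the data.
    k = max(int(np.ceil(delta * len(sorted_returns))) - 1, 0)
    return float(sorted_returns[k])

def worst_case_return(returns):
    """Return under the worst sampled model (an ambiguity set covering all samples)."""
    return float(np.min(returns))

sampled_returns = [5.0, 1.0, 3.0, 7.0, 2.0, 8.0, 6.0, 4.0]
print(percentile_return(sampled_returns, delta=0.25))
print(worst_case_return(sampled_returns))
```

The percentile value is never below the worst-case value, which is one way to see why guarding against every model in a large set can be more conservative than the criterion requires.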

Data Poisoning Attacks on Off-Policy Policy Evaluation Methods

Apr 06, 2024 · Elita Lobo, Harvineet Singh, Marek Petrik, Cynthia Rudin, Himabindu Lakkaraju

Off-policy Evaluation (OPE) methods are a crucial tool for evaluating policies in high-stakes domains such as healthcare, where exploration is often infeasible, unethical, or expensive. However, the extent to which such methods can be trusted under adversarial threats to data quality is largely unexplored. In this work, we make the first attempt at investigating the sensitivity of OPE methods to marginal adversarial perturbations to the data. We design a generic data poisoning attack framework leveraging influence functions from robust statistics to carefully construct perturbations that maximize error in the policy value estimates. We carry out extensive experimentation with multiple healthcare and control datasets. Our results demonstrate that many existing OPE methods are highly prone to generating value estimates with large errors when subject to data poisoning attacks, even for small adversarial perturbations. These findings question the reliability of policy values derived using OPE methods and motivate the need for developing OPE methods that are statistically robust to train-time data poisoning attacks.

A Convex Relaxation Approach to Bayesian Regret Minimization in Offline Bandits

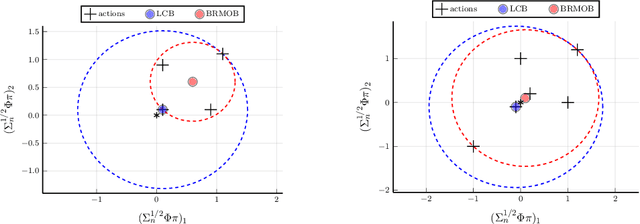

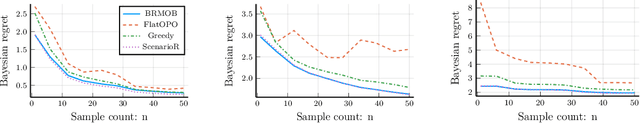

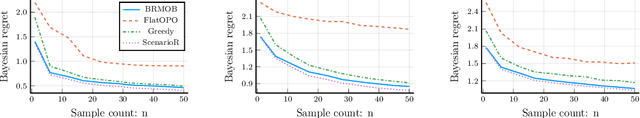

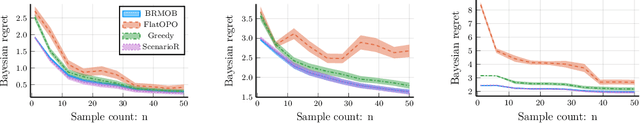

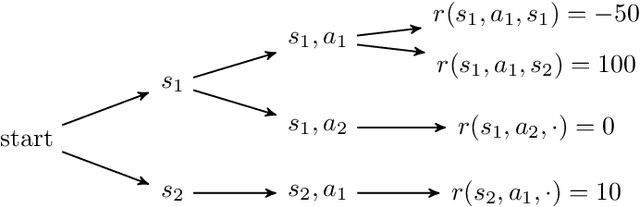

Jun 02, 2023 · Mohammad Ghavamzadeh, Marek Petrik, Guy Tennenholtz

Algorithms for offline bandits must optimize decisions in uncertain environments using only offline data. A compelling and increasingly popular objective in offline bandits is to learn a policy which achieves low Bayesian regret with high confidence. An appealing approach to this problem, inspired by recent offline reinforcement learning results, is to maximize a form of lower confidence bound (LCB). This paper proposes a new approach that directly minimizes upper bounds on Bayesian regret using efficient conic optimization solvers. Our bounds build on connections among Bayesian regret, Value-at-Risk (VaR), and chance-constrained optimization. Compared to prior work, our algorithm attains superior theoretical offline regret bounds and better results in numerical simulations. Finally, we provide some evidence that popular LCB-style algorithms may be unsuitable for minimizing Bayesian regret in offline bandits.

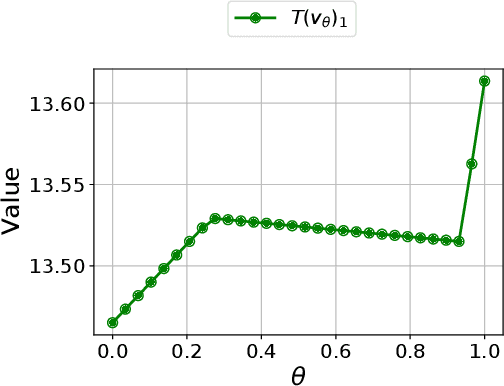

On Dynamic Program Decompositions of Static Risk Measures

Apr 24, 2023 · Jia Lin Hau, Erick Delage, Mohammad Ghavamzadeh, Marek Petrik

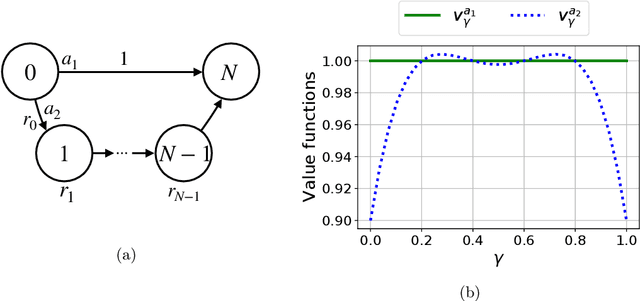

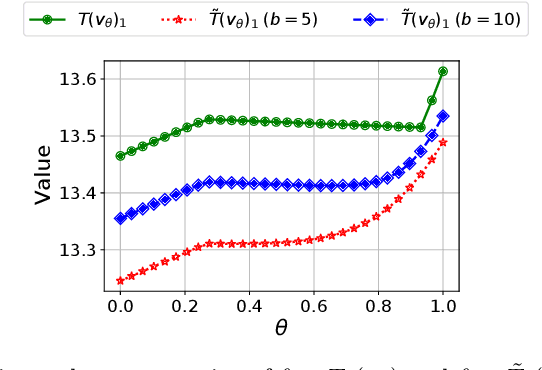

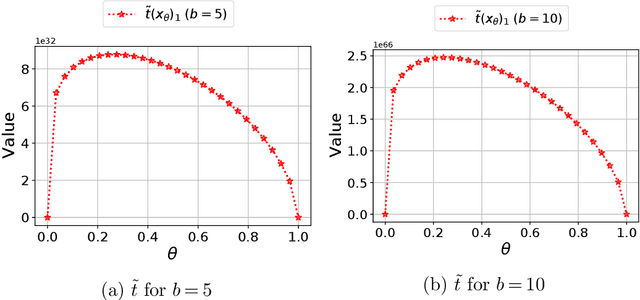

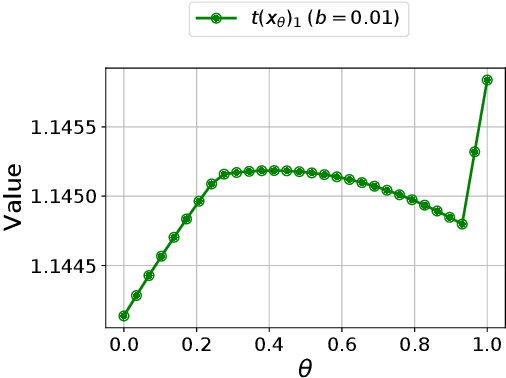

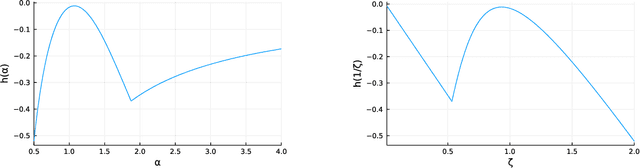

Optimizing static risk-averse objectives in Markov decision processes is challenging because they do not readily admit dynamic programming decompositions. Prior work has proposed using a dynamic decomposition of risk measures that helps to formulate dynamic programs on an augmented state space. This paper shows that several existing decompositions are inherently inexact, contradicting several claims in the literature. In particular, we give examples that show that popular decompositions for CVaR and EVaR risk measures are strict overestimates of the true risk values. However, an exact decomposition is possible for VaR, and we give a simple proof that illustrates the fundamental difference between VaR and CVaR dynamic programming properties.
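
For readers unfamiliar with the two risk measures contrasted above, the following is a minimal sketch of simple empirical estimators, not the decompositions studied in the paper. It assumes a reward convention (larger is better, risk lives in the lower tail) and equally weighted samples; the function name `var_cvar` is hypothetical, and other sign or tail conventions are common.

```python
import numpy as np

def var_cvar(samples, alpha):
    """Empirical lower-tail VaR and CVaR of equally weighted reward samples.

    VaR is taken as the largest value inside the worst alpha fraction of
    samples; CVaR is the average of that worst alpha fraction.
    """
    x = np.sort(np.asarray(samples, dtype=float))
    k = max(int(np.ceil(alpha * len(x))), 1)  # number of samples in the tail
    var = float(x[k - 1])
    cvar = float(x[:k].mean())
    return var, cvar

var, cvar = var_cvar([10.0, 0.0, 4.0, 6.0], alpha=0.5)
print(var, cvar)
```

Under this convention CVaR never exceeds VaR, because it averages over the whole tail rather than reading off a single quantile.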

Reducing Blackwell and Average Optimality to Discounted MDPs via the Blackwell Discount Factor

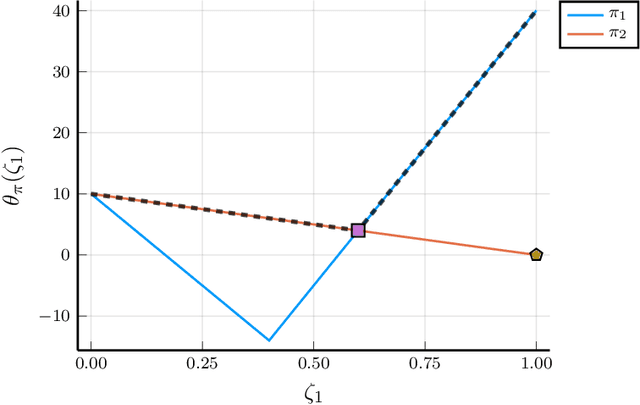

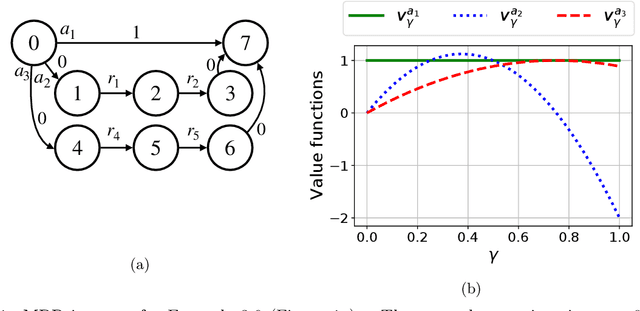

Jan 31, 2023 · Julien Grand-Clément, Marek Petrik

We introduce the Blackwell discount factor for Markov Decision Processes (MDPs). Classical objectives for MDPs include discounted, average, and Blackwell optimality. Many existing approaches to computing average-optimal policies solve for discounted optimal policies with a discount factor close to $1$, but they only work under strong or hard-to-verify assumptions such as ergodicity or weakly communicating MDPs. In this paper, we show that when the discount factor is larger than the Blackwell discount factor $\gamma_{\mathrm{bw}}$, all discounted optimal policies become Blackwell- and average-optimal, and we derive a general upper bound on $\gamma_{\mathrm{bw}}$. The upper bound on $\gamma_{\mathrm{bw}}$ provides the first reduction from average and Blackwell optimality to discounted optimality, without any assumptions, and new polynomial-time algorithms for average- and Blackwell-optimal policies. Our work brings new ideas from the study of polynomials and algebraic numbers to the analysis of MDPs. Our results also apply to robust MDPs, enabling the first algorithms to compute robust Blackwell-optimal policies.

On the Convergence of Policy Gradient in Robust MDPs

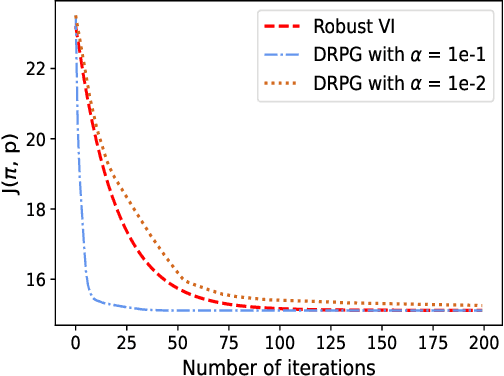

Dec 20, 2022 · Qiuhao Wang, Chin Pang Ho, Marek Petrik

Robust Markov decision processes (RMDPs) are promising models that provide reliable policies under ambiguities in model parameters. As opposed to nominal Markov decision processes (MDPs), however, the state-of-the-art solution methods for RMDPs are limited to value-based methods, such as value iteration and policy iteration. This paper proposes Double-Loop Robust Policy Gradient (DRPG), the first generic policy gradient method for RMDPs with a global convergence guarantee in tabular problems. Unlike value-based methods, DRPG does not rely on dynamic programming techniques. In particular, the inner-loop robust policy evaluation problem is solved via projected gradient descent. Finally, our experimental results demonstrate the performance of our algorithm and verify our theoretical guarantees.
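
Projected gradient descent alternates a gradient step with a projection back onto the feasible set. As an illustration only, the sketch below shows the standard sort-based Euclidean projection onto the probability simplex, which is the simplest feasible set for a transition distribution. It is an assumption made for this example, not DRPG's inner loop: the ambiguity sets in the paper may be more structured, and the function name is hypothetical.

```python
import numpy as np

def project_to_simplex(v):
    """Euclidean projection of a vector onto the probability simplex."""
    v = np.asarray(v, dtype=float)
    u = np.sort(v)[::-1]                 # entries in decreasing order
    css = np.cumsum(u) - 1.0             # cumulative sums shifted by the target total
    idx = np.arange(1, len(v) + 1)
    # Last position where the sorted entry stays above the running threshold.
    rho = np.nonzero(u - css / idx > 0)[0][-1]
    theta = css[rho] / (rho + 1)
    return np.maximum(v - theta, 0.0)    # shift and clip to get a distribution

# One projected gradient step on a hypothetical linear objective <p, cost>.
p = np.array([0.5, 0.5])
cost = np.array([1.0, -1.0])
p_next = project_to_simplex(p - 0.1 * cost)
print(p_next)
```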

On the convex formulations of robust Markov decision processes

Sep 21, 2022 · Julien Grand-Clément, Marek Petrik

Robust Markov decision processes (RMDPs) are used for applications of dynamic optimization in uncertain environments and have been studied extensively. Many of the main properties and algorithms of MDPs, such as value iteration and policy iteration, extend directly to RMDPs. Surprisingly, there is no known analog of the MDP convex optimization formulation for solving RMDPs. This work describes the first convex optimization formulation of RMDPs under the classical sa-rectangularity and s-rectangularity assumptions. We derive a convex formulation with a linear number of variables and constraints but large coefficients in the constraints by using entropic regularization and exponential change of variables. Our formulation can be combined with efficient methods from convex optimization to obtain new algorithms for solving RMDPs with uncertain probabilities. We further simplify the formulation for RMDPs with polyhedral uncertainty sets. Our work opens a new research direction for RMDPs and can serve as a first step toward obtaining a tractable convex formulation of RMDPs.

RASR: Risk-Averse Soft-Robust MDPs with EVaR and Entropic Risk

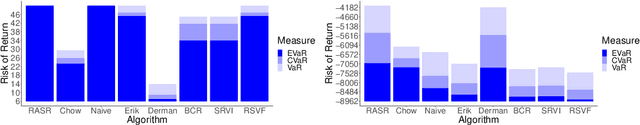

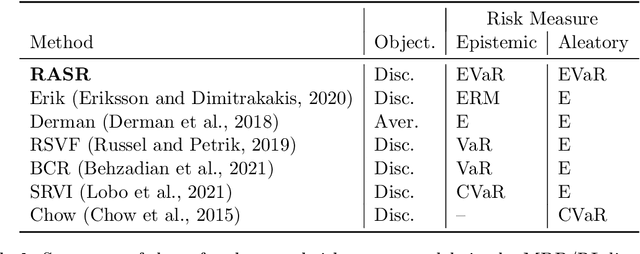

Sep 14, 2022 · Jia Lin Hau, Marek Petrik, Mohammad Ghavamzadeh, Reazul Russel

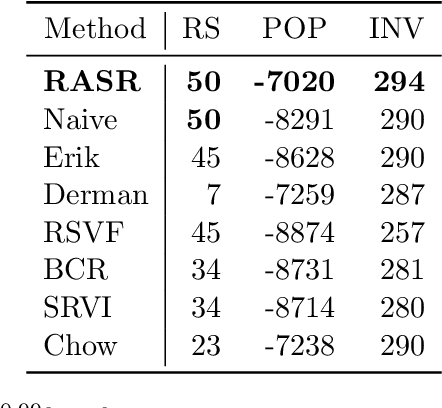

Prior work on safe Reinforcement Learning (RL) has studied risk-aversion to randomness in dynamics (aleatory) and to model uncertainty (epistemic) in isolation. We propose and analyze a new framework to jointly model the risk associated with epistemic and aleatory uncertainties in finite-horizon and discounted infinite-horizon MDPs. We call this framework that combines Risk-Averse and Soft-Robust methods RASR. We show that when the risk-aversion is defined using either EVaR or the entropic risk, the optimal policy in RASR can be computed efficiently using a new dynamic program formulation with a time-dependent risk level. As a result, the optimal risk-averse policies are deterministic but time-dependent, even in the infinite-horizon discounted setting. We also show that particular RASR objectives reduce to risk-averse RL with mean posterior transition probabilities. Our empirical results show that our new algorithms consistently mitigate uncertainty as measured by EVaR and other standard risk measures.
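
As a small illustration of one of the risk measures named above, here is a sketch of an empirical entropic risk estimate. It assumes the common reward-based definition, entropic risk at level `beta > 0` equal to `-(1/beta) * log E[exp(-beta * X)]`, which may differ in sign or scaling from the convention used in the paper; the function name is hypothetical.

```python
import numpy as np

def entropic_risk(samples, beta):
    """Empirical entropic risk of reward samples for a risk level beta > 0.

    Uses a max-shift inside the exponential for numerical stability.
    """
    x = np.asarray(samples, dtype=float)
    z = -beta * x
    m = z.max()
    log_mean_exp = m + np.log(np.mean(np.exp(z - m)))
    return float(-log_mean_exp / beta)

print(entropic_risk([0.0, 2.0], beta=1.0))
```

For a constant reward the estimate equals that constant, and for a random reward it lies between the minimum and the mean, moving toward the minimum as `beta` grows.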

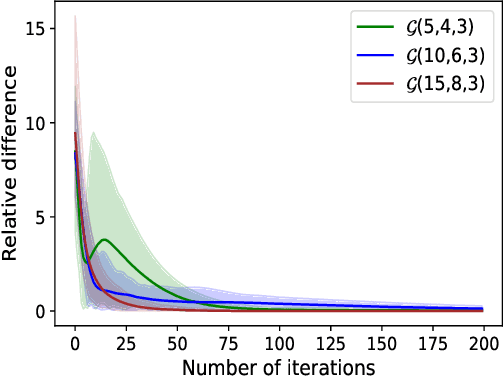

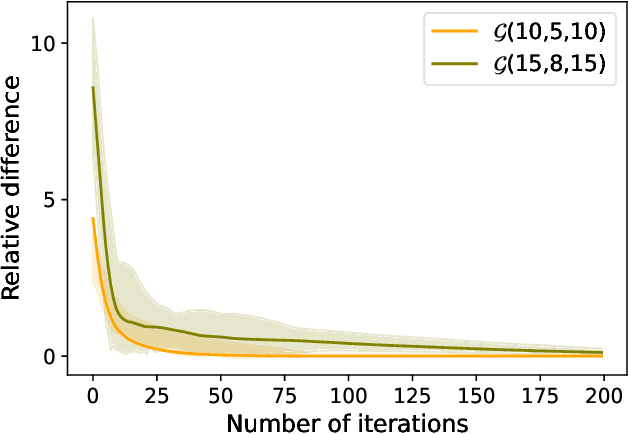

Robust Phi-Divergence MDPs

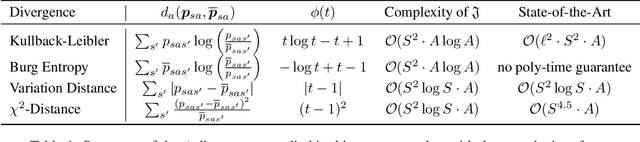

May 27, 2022 · Chin Pang Ho, Marek Petrik, Wolfram Wiesemann

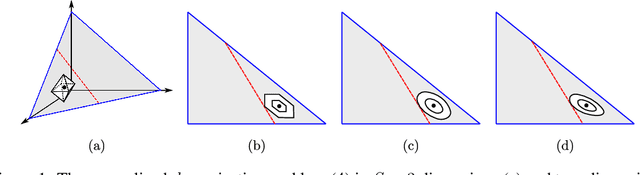

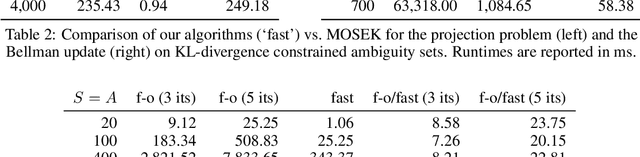

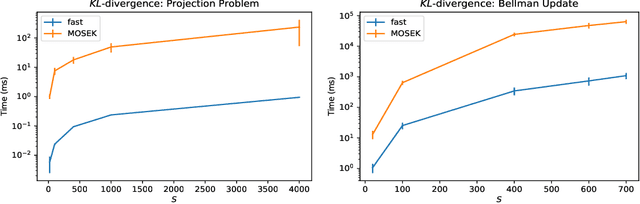

In recent years, robust Markov decision processes (MDPs) have emerged as a prominent modeling framework for dynamic decision problems affected by uncertainty. In contrast to classical MDPs, which only account for stochasticity by modeling the dynamics through a stochastic process with a known transition kernel, robust MDPs additionally account for ambiguity by optimizing in view of the most adverse transition kernel from a prescribed ambiguity set. In this paper, we develop a novel solution framework for robust MDPs with s-rectangular ambiguity sets that decomposes the problem into a sequence of robust Bellman updates and simplex projections. Exploiting the rich structure present in the simplex projections corresponding to phi-divergence ambiguity sets, we show that the associated s-rectangular robust MDPs can be solved substantially faster than with state-of-the-art commercial solvers as well as a recent first-order solution scheme, thus rendering them attractive alternatives to classical MDPs in practical applications.
